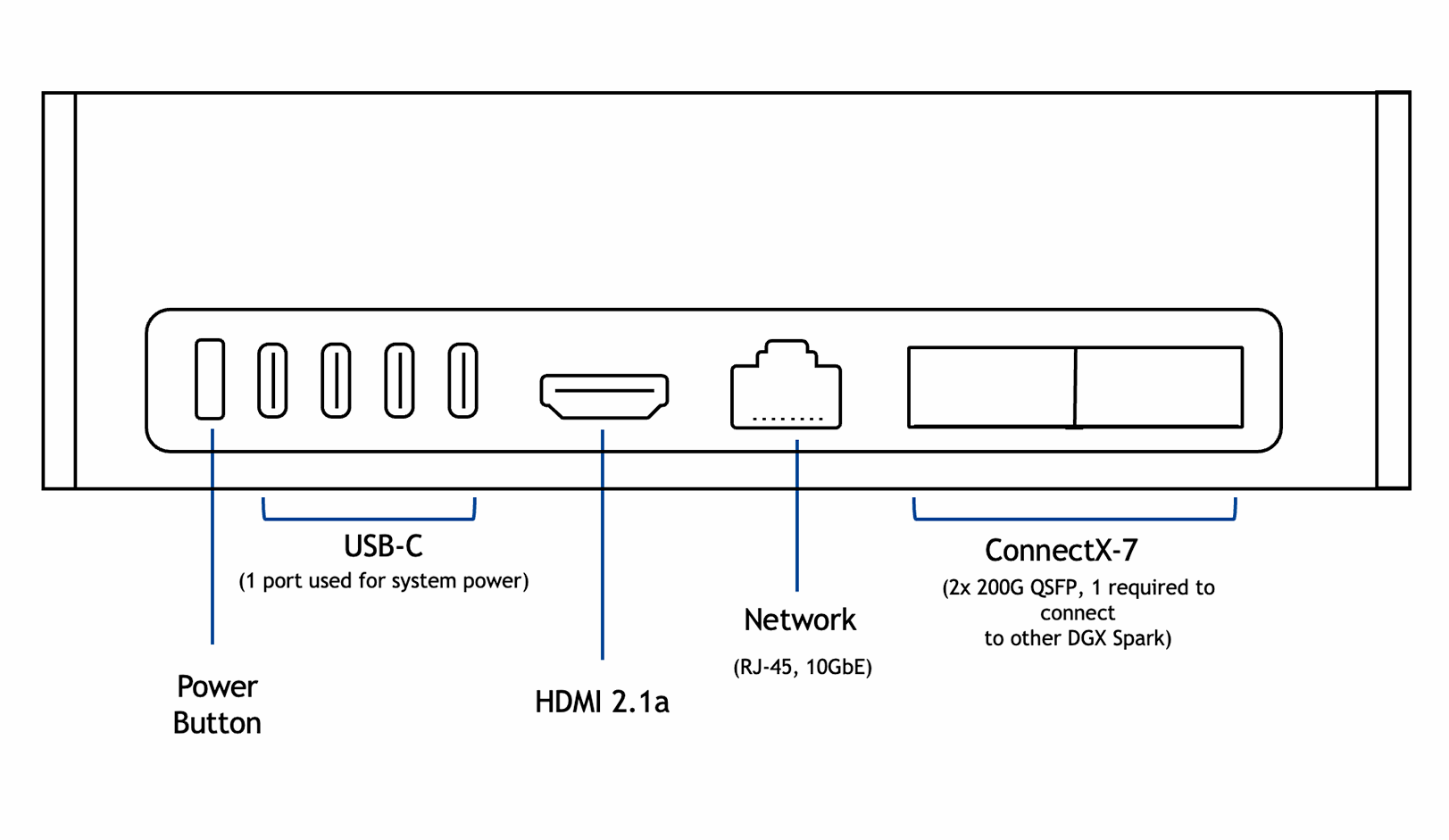

NVIDIA DGX Spark — data center class AI at your desk

A compact personal AI supercomputer powered by the NVIDIA Grace Blackwell architecture — your gateway to the Comino AI ecosystem.

Suitable for running models of up to roughly 200 billion parameters locally, with the option to scale to around 405 billion parameters by connecting two systems.
